Testing Passage Of Time In Smart Contracts

October 15, 2021

Many ERC20 tokens can be earned by staking other assets, with a reward yield based on how long the assets have been staked. In smart contracts you can track the passage of time using block.timestamp, the current block's timestamp in seconds since the Unix epoch. DeFi contracts use this kind of time measurement to pay out yield, as do many NFT projects that offer utility tokens for staking the NFTs themselves!

We can create our own staking logic in smart contracts and easily test the rewards over time by using OpenZeppelin Test Helpers. This package of helpers for smart contract tests (it works with Hardhat as well as Truffle) lets you easily test things like reverting transactions, event emissions, and the passage of time.

Here is a quick example of installing the package and creating a test that fast-forwards time while staking assets!

1. Install packages

You will need to install the test helpers as well as the Hardhat web3 integration package:

```shell
yarn add @openzeppelin/test-helpers
yarn add @nomiclabs/hardhat-web3 web3
```
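According to the hardhat-web3 plugin's setup instructions, the plugin also has to be loaded from your Hardhat config so that a Web3 instance is added to the Hardhat runtime. A minimal sketch, assuming a TypeScript config file (the Solidity version is an assumption; use your project's):

```typescript
// hardhat.config.ts
// Loading the plugin adds a Web3 instance to the Hardhat runtime environment.
import "@nomiclabs/hardhat-web3";
import { HardhatUserConfig } from "hardhat/config";

const config: HardhatUserConfig = {
  solidity: "0.8.7", // assumption: match your contracts' pragma
};

export default config;
```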

2. Import helpers

Put this at the beginning of your Hardhat test file:

```javascript
const {
  BN, // Big Number support
  constants, // Common constants, like the zero address and largest integers
  expectEvent, // Assertions for emitted events
  expectRevert, // Assertions for transactions that should fail
  time, // Helpers for manipulating block time
} = require('@openzeppelin/test-helpers');
```

3. Add fast forwarding to your test

Here is a snippet of a smart contract that uses a logged timestamp to determine how long an asset has been staked and rewards the staker at a rate based on that time. It is from the Anonymice $CHEETH contract, which lets you stake Anonymice NFTs for $CHEETH tokens.

```solidity
totalRewards =
    totalRewards +
    ((block.timestamp - tokenIdToTimeStamp[tokenId]) * EMISSIONS_RATE);
```
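To work out what a test should expect, here is a hypothetical TypeScript model of the formula above. It assumes each token's stake time is recorded in seconds, uses bigint to mimic Solidity's integer math, and uses made-up names (`Stake`, `pendingRewards`). It is a sketch for reasoning about expected values, not code from the $CHEETH contract.

```typescript
// Hypothetical model of: totalRewards += (block.timestamp - stakedAt) * EMISSIONS_RATE
interface Stake {
  tokenId: number;
  stakedAt: bigint; // block.timestamp recorded when the token was staked (seconds)
}

function pendingRewards(stakes: Stake[], now: bigint, emissionsRate: bigint): bigint {
  let totalRewards = 0n;
  for (const stake of stakes) {
    if (now < stake.stakedAt) {
      // Solidity 0.8 would revert on this subtraction underflow.
      throw new Error(`token ${stake.tokenId}: current time is before stake time`);
    }
    totalRewards += (now - stake.stakedAt) * emissionsRate;
  }
  return totalRewards;
}

// Two tokens staked at the same time, then 50000 seconds pass.
const staked: Stake[] = [
  { tokenId: 0, stakedAt: 1000000n },
  { tokenId: 1, stakedAt: 1000000n },
];
console.log(pendingRewards(staked, 1050000n, 10n).toString()); // rate of 10 is made up
```

In a test, you could compare the balance after `time.increase(50000)` with the model's value for a 50,000-second gap, allowing a small tolerance because the unstake transaction's block.timestamp can land a few seconds past the increased time.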

Here is a small snippet of a staking test that fast-forwards the block time so we can test the yield from the contract:

```javascript
// stake tokens 0 and 1
await cheethContract.stakeByIds([0, 1]);

// fast forward time by 50000 seconds
await time.increase(50000);

// unstake anonymice
await cheethContract.unstakeAll();

// check balance
const balance = await cheethContract.balanceOf(currentAddress.address);

// make assertion on balance based on time passed
```

4. Running on Hardhat

This part was a little tricky to figure out. In my setup, the helpers would not run on Hardhat's built-in network without a few extra steps.

Run the Hardhat integrated node:

```shell
npx hardhat node
```

Then run your Hardhat tests pointed at localhost:

```shell
npx hardhat test --network localhost
```

This lets the test helpers interact with the test node.
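Hardhat's network also supports the `evm_increaseTime` and `evm_mine` JSON-RPC methods, which can be sent through `network.provider` (imported from "hardhat") without going through the test helpers. A minimal sketch; the `JsonRpcSender` interface and `increaseTime` function are hypothetical names:

```typescript
// Anything that can send raw JSON-RPC requests, e.g. Hardhat's network.provider.
interface JsonRpcSender {
  send(method: string, params?: unknown[]): Promise<unknown>;
}

// Advance the chain clock by `seconds`, then mine a block so the new
// block.timestamp is visible to the next contract call.
async function increaseTime(provider: JsonRpcSender, seconds: number): Promise<void> {
  if (!Number.isInteger(seconds) || seconds < 0) {
    throw new Error("seconds must be a non-negative integer");
  }
  await provider.send("evm_increaseTime", [seconds]);
  await provider.send("evm_mine", []);
}
```

In a Hardhat test this would be called as `await increaseTime(network.provider, 50000);` in place of `time.increase(50000)`.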

Testing Price Feeds On Mainnet With Hardhat

October 01, 2021

When writing smart contracts that interact with price feed oracles or perform operations like flash loans and flash swaps, it can be useful to test your code on a mainnet fork. It is a great final step in integration testing when you want to make sure your contract calls all of the correct mainnet addresses and that all of the testnet addresses have been swapped out. It is also convenient if you don't want to hunt down the various testnet contract addresses for the feeds and AMMs you want to use.

Running tests on mainnet forks is easy using Hardhat, a framework for developing and testing Solidity smart contracts. You can follow this guide to get a standard Hardhat setup working.

To test on a fork of mainnet, simply change your hardhat.config.ts to point at a mainnet RPC API endpoint using the forking directive:

```typescript
// Mainnet RPC endpoint URL, read from an environment variable
const MAIN_API_URL = process.env.MAIN_API_URL;

module.exports = {
  solidity: "0.8.7",
  defaultNetwork: "hardhat",
  networks: {
    hardhat: {
      forking: {
        url: MAIN_API_URL,
        // blockNumber: 11095000
      },
    },
  },
};
```

Pinning a block number lets Hardhat cache the forked state, so your tests execute faster after the first run.

We will test a Chainlink price feed call for ETH/USD on our fork. Here is the code for the consumer smart contract:

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.7;

import "@chainlink/contracts/src/v0.8/interfaces/AggregatorV3Interface.sol";

contract PriceConsumer {
    AggregatorV3Interface internal priceFeed;

    /**
     * Network: Mainnet
     * Aggregator: ETH/USD
     * Address: 0x5f4eC3Df9cbd43714FE2740f5E3616155c5b8419
     */
    constructor() {
        priceFeed = AggregatorV3Interface(0x5f4eC3Df9cbd43714FE2740f5E3616155c5b8419);
    }

    /**
     * Returns the latest price
     */
    function getLatestPrice() public view returns (int) {
        // Only the answer is needed; the other round fields are skipped
        (, int price, , , ) = priceFeed.latestRoundData();
        return price;
    }
}
```

And here is the Hardhat test code to deploy the contract, call it, and print the result of the oracle query on the mainnet fork:

```typescript
import { ethers } from "hardhat";
import { beforeEach } from "mocha";
import { Contract } from "ethers";
import { SignerWithAddress } from "@nomiclabs/hardhat-ethers/signers";

describe("Oracle Consumer", () => {
  let priceCheck: Contract;
  let owner: SignerWithAddress;
  let address1: SignerWithAddress;
  let address2: SignerWithAddress;

  beforeEach(async () => {
    [owner, address1, address2] = await ethers.getSigners();
    const ChainLinkFactory = await ethers.getContractFactory("PriceConsumer");
    priceCheck = await ChainLinkFactory.deploy();
    await priceCheck.deployed();
  });

  it("Check last ETH price", async () => {
    // The ETH/USD feed answers with 8 decimals
    const price = await priceCheck.getLatestPrice();
    console.log(ethers.utils.formatUnits(price, 8));
  });
});
```

I will be posting some flash loan / flash swap code that uses this approach for real-world testing!
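The price feed returns the answer as an integer scaled by 10^8 (the ETH/USD feed uses 8 decimals), so it has to be scaled down for display. Converting through floating-point division can lose precision for large values. A minimal sketch of exact fixed-point formatting with bigint; `formatFixed` is a hypothetical helper, not part of Chainlink or ethers:

```typescript
// Convert a fixed-point integer (e.g. a price feed answer) into a decimal
// string without going through floating point.
function formatFixed(raw: bigint, decimals: number): string {
  const negative = raw < 0n;
  const abs = negative ? -raw : raw;
  const base = 10n ** BigInt(decimals);
  const whole = abs / base;
  // Left-pad the remainder to `decimals` digits, then drop trailing zeros.
  const fraction = (abs % base).toString().padStart(decimals, "0").replace(/0+$/, "");
  return `${negative ? "-" : ""}${whole}${fraction ? "." + fraction : ""}`;
}
```

With an ethers BigNumber answer, the call would look like `formatFixed(BigInt(price.toString()), 8)`.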

Securing Your Mint Smart Contract Function

October 01, 2021

NFTs are all the rage, and thanks to standards like ERC-721 and libraries like OpenZeppelin, anyone can deploy an NFT smart contract to the Ethereum mainnet with relative ease.

A key part of any NFT drop is the minting function. This is the function outside users call to generate their NFT from the ERC-721 compliant contract. It is usually a payable function that takes parameters like the number of NFTs to mint and the address to send the NFTs to.

Developers include a number of restrictions on these minting functions to make sure that the user has paid enough ETH for the number of NFTs they are trying to mint, and to limit the number of NFTs a user can mint per transaction. Developers have also tried using block time to prevent users from minting too many NFTs over a period of time.

Here is an example minting function from the Party Penguins NFT project contract:

```solidity
function mint(address _to, uint256 num) public payable {
    uint256 supply = totalSupply();

    if (msg.sender != owner()) {
        require(!_paused, "Sale Paused");
        require(num < (_maxMint + 1), "You can adopt a maximum of _maxMint Penguins");
        require(msg.value >= _price * num, "Ether sent is not correct");
    }

    require(supply + num < MAX_ENTRIES, "Exceeds maximum supply");

    for (uint256 i; i < num; i++) {
        _safeMint(_to, supply + i);
    }
}
```

The require statements place restrictions on the user's ability to mint. When a require statement fails, the entire transaction is reverted and none of its changes are made on the blockchain. This function limits the number of NFTs the caller can mint per call, verifies the ETH the user has sent along with the call, and makes sure the mint won't exceed the total supply of the collection. The owner of the contract bypasses the pause, per-call limit, and price checks.
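The checks above can be modeled in TypeScript to reason about which calls would revert. This is a hypothetical sketch: `MintState` and `mintRevertReason` are made-up names, the example values are invented, and returning the first failing message stands in for a revert.

```typescript
// Hypothetical model of the Party Penguins mint() checks.
interface MintState {
  owner: string;
  paused: boolean;    // _paused
  maxMint: number;    // _maxMint
  price: bigint;      // _price, in wei
  maxEntries: number; // MAX_ENTRIES
  supply: number;     // totalSupply()
}

// Returns the revert message of the first failing require, or null if the mint succeeds.
function mintRevertReason(state: MintState, sender: string, num: number, value: bigint): string | null {
  if (sender !== state.owner) {
    if (state.paused) return "Sale Paused";
    if (!(num < state.maxMint + 1)) return "You can adopt a maximum of _maxMint Penguins";
    if (!(value >= state.price * BigInt(num))) return "Ether sent is not correct";
  }
  if (!(state.supply + num < state.maxEntries)) return "Exceeds maximum supply";
  return null;
}
```

One thing the model makes easy to see: because the supply check uses a strict `<`, the supply can never reach MAX_ENTRIES, so the largest reachable supply is MAX_ENTRIES - 1.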

Developers also usually provide a front-end UI that lets users connect their wallet and enter the number of NFTs to mint. The UI then calculates how much ETH the transaction will cost and sends the transaction to the user's wallet to be signed and executed. The UI can also enforce minting restrictions, BUT users can interact with the mint function directly from the contract page on Etherscan, so every restriction needs to be built into the mint function itself.

Of course, attackers have taken advantage of a number of NFT minting functions to mint more than their fair share of NFTs.

One attack vector is calling a mint function multiple times from another smart contract. Any public function, and minting functions are public, can be called by any contract that knows the function signature and the contract's address. Attackers can bundle many mint calls into one transaction and then use services like Flashbots to push their exploiting transactions through at low gas prices, avoiding mitigation efforts by the NFT contract owner, like "pausing" the mint as the Party Penguins contract can do.

Here is an example attacker contract that I created for testing:

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.0;

interface TargetInterface {
    function mint(address _to, uint256 num) external payable;
}

contract MintAttacker {
    TargetInterface _target;

    constructor(address toAttack) {
        _target = TargetInterface(toAttack);
    }

    function attackMint(uint256 count) public payable {
        for (uint256 i = 0; i < count; i++) {
            _target.mint{value: msg.value / count}(msg.sender, 1);
        }
    }
}
```

It takes in the address of the victim contract and calls its mint function multiple times within a single transaction. This can be used to get around mint count and time restrictions in the victim contract, as well as to save gas by executing all of the mints from one contract call.
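To see why a per-call limit doesn't stop this, here is a hypothetical TypeScript model of a target that caps each mint() call and of the attacker's loop. `Target` and `attackMint` are illustrative names, the limits and prices are made up, and unlike Solidity, a thrown error here does not roll back earlier mints.

```typescript
// Hypothetical target: caps each mint() call at maxPerCall tokens.
class Target {
  readonly minted = new Map<string, number>();
  readonly maxPerCall: number;
  readonly price: bigint;

  constructor(maxPerCall: number, price: bigint) {
    this.maxPerCall = maxPerCall;
    this.price = price;
  }

  mint(to: string, num: number, value: bigint): void {
    if (num > this.maxPerCall) throw new Error("exceeds per-call limit");
    if (value < this.price * BigInt(num)) throw new Error("Ether sent is not correct");
    this.minted.set(to, (this.minted.get(to) ?? 0) + num);
  }
}

// Mirrors MintAttacker.attackMint: splits msg.value evenly over `count` calls
// of one token each, all sent to the attacker's wallet.
function attackMint(target: Target, wallet: string, count: number, msgValue: bigint): void {
  const perCall = msgValue / BigInt(count); // integer division, like Solidity
  for (let i = 0; i < count; i++) {
    target.mint(wallet, 1, perCall);
  }
}
```

A direct call for 20 tokens is rejected by the per-call limit, but `attackMint(target, wallet, 20, ...)` mints all 20 in one go.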

We can protect our mint function by adding a modifier that prevents the function from being called by contracts. A Solidity modifier augments another function: when a function is annotated with a modifier, the modifier's code runs, and its `_;` placeholder marks where the annotated function's body executes, so the modifier can run code either before or after it.

Here is the onlyOwner modifier provided by the OpenZeppelin library:

```solidity
modifier onlyOwner() {
    require(owner() == _msgSender(), "Ownable: caller is not the owner");
    _;
}
```

We can then annotate any function with onlyOwner, and it will verify that the caller of the annotated function is the owner of the contract.
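A modifier behaves a lot like a function wrapper: the check runs first, then control passes to the wrapped body where `_;` sits. Here is a loose TypeScript analogy with hypothetical names (`Ctx`, `onlyOwner`); Solidity actually inlines modifier code into the function at compile time, so this only illustrates the control flow.

```typescript
// The caller context a Solidity function would see as msg.sender.
type Ctx = { sender: string };

// Wraps `body` so the ownership check runs before it, like onlyOwner.
function onlyOwner<A extends unknown[], R>(
  owner: string,
  body: (ctx: Ctx, ...args: A) => R,
): (ctx: Ctx, ...args: A) => R {
  return (ctx: Ctx, ...args: A): R => {
    if (ctx.sender !== owner) throw new Error("Ownable: caller is not the owner");
    return body(ctx, ...args); // corresponds to `_;`
  };
}

// Usage: a hypothetical owner-only function.
const doubleForOwner = onlyOwner("0xowner", (_ctx: Ctx, amount: number) => amount * 2);
```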

To help protect our mint function from being attacked by other contracts I created a validAddress modifier that checks that the origin of the transaction is the sender of the function call.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.0;

abstract contract MintSecure {
    modifier validAddress() {
        require(tx.origin == msg.sender, "MintSecure: caller cannot be a smart contract");
        _;
    }
}
```

tx.origin is the wallet (externally owned account) that signed and sent the transaction, and it stays the same through every nested call made during that transaction. In our attacker's case, this is the wallet address calling attackMint. msg.sender is the immediate caller of the current function, which in the case of `_target.mint{value: msg.value/count}(msg.sender,1);` is the address of the attacker contract.

validAddress simply makes sure the function caller is the same as the transaction origin. This prevents smart contracts from calling any function protected by the modifier!
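The difference between the two values can be simulated in a few lines of TypeScript. This is a hypothetical model (`CallFrame` and the helper functions are made-up names) of how tx.origin and msg.sender look to mint() for a direct call versus a call routed through an attacker contract:

```typescript
// What a called function sees for one call.
interface CallFrame {
  origin: string; // tx.origin: the wallet that signed the transaction
  sender: string; // msg.sender: the immediate caller
}

// Mirrors the validAddress modifier: require(tx.origin == msg.sender).
function validAddress(frame: CallFrame): void {
  if (frame.origin !== frame.sender) {
    throw new Error("MintSecure: caller cannot be a smart contract");
  }
}

function mint(frame: CallFrame): string {
  validAddress(frame);
  return `minted for ${frame.sender}`;
}

// Wallet calls mint() directly: origin and sender are the same address.
function callDirectly(wallet: string): string {
  return mint({ origin: wallet, sender: wallet });
}

// Wallet calls an attacker contract, which calls mint():
// tx.origin is still the wallet, but msg.sender is the contract.
function callThroughContract(wallet: string, contract: string): string {
  return mint({ origin: wallet, sender: contract });
}
```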

To use this modifier, simply annotate your mint function with validAddress (your contract also needs to inherit from MintSecure):

```solidity
function mint(address _to, uint256 num) public payable validAddress() {
    // your mint logic
}
```

Of course, there are many ways around this modifier. An attacker can simply run the mint() loop from a local JavaScript script, sending each mint as a separate transaction, instead of using a smart contract.

I may expand on this post in the future with other techniques to prevent exploitation of mint functions.

Genesis Post: Building and deploying a blog about web3 on web3

September 30, 2021

I created this to document my journey through learning web3. I thought what better way to get started than to build and deploy this blog using web3 technologies.

Here is a quick rundown of some of the web3 infrastructure we will be using to host our blog:

IPFS stands for InterPlanetary File System, a distributed peer-to-peer network for storing and serving files. Anyone can upload content to IPFS and have it served worldwide without relying on centralized servers and authorities.

A user can upload content to an IPFS node and get back a content identifier (CID), derived from a hash of the content, which can be referenced using the ipfs:// protocol. Nodes can garbage-collect content they have not been asked to keep, so to make a file permanently available the user can "pin" it, which tells a node to keep it. Pinning the content on more than one node, for example through a pinning service, keeps it available even when your own node is offline. Once the content is pinned, anyone in the world can fetch it by its hash from any node that has it.
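The key idea is content addressing: the address is derived from the bytes themselves, so identical content always gets the same address and any node can verify what it serves. A simplified sketch using plain SHA-256 from Node's crypto module; real IPFS CIDs wrap a multihash of chunked, DAG-encoded content, so this is NOT how a CID is computed, and `put`/`get` are a hypothetical toy store.

```typescript
import { createHash } from "crypto";

// Simplified content address: a SHA-256 digest of the content (not a real CID).
function contentAddress(content: string): string {
  return createHash("sha256").update(content).digest("hex");
}

// A toy store where content is looked up by its address.
const store = new Map<string, string>();

function put(content: string): string {
  const address = contentAddress(content);
  store.set(address, content);
  return address;
}

function get(address: string): string | undefined {
  const content = store.get(address);
  // Integrity check: the content must hash back to the address it was requested by.
  if (content !== undefined && contentAddress(content) !== address) {
    throw new Error("content does not match its address");
  }
  return content;
}
```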

ENS stands for Ethereum Name Service, a decentralized lookup system that lets you link information like Ethereum wallet addresses, IPFS content hashes, and other contact information to an easy-to-understand name ending in ".eth". It is similar to DNS and normal domain names, except ENS is built on the Ethereum blockchain and operated by smart contracts (more on those in another post). An ENS name can have unlimited subdomains, which we will use to link to our blog.

Steps for building our blog:

- Build a statically generated blog using Gatsby

- Deploy blog to IPFS

- Link an ENS subdomain to the IPFS hash

I chose Gatsby, a React-based static site generator, as my blogging engine because I have some familiarity with it, but I ran into a couple of issues when trying to serve the blog from IPFS. I followed the guide on the Gatsby site to build the basic blog engine and added plugins for things like syntax highlighting and Tailwind CSS. This link was useful for getting Tailwind and Markdown to style properly.

One important plugin that Gatsby needs to work with IPFS is gatsby-plugin-ipfs. Gatsby does not support relative paths for assets, so when you upload your site to IPFS, all links to JavaScript assets and images will break. This plugin sets relative paths for your assets automatically. There are also navigation issues when serving from IPFS, which the gatsby-plugin-catch-links plugin alleviates.
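The reason absolute asset paths break is that a gateway serves the site under a `/ipfs/<CID>/` prefix, so a root-relative URL like `/static/app.js` resolves outside the site. A small sketch using standard WHATWG URL resolution to show the difference; the page URLs and asset paths are example values, and this does not show how gatsby-plugin-ipfs works internally.

```typescript
// Example site root on a public gateway (CID taken from the upload step of this post).
const siteRoot = "https://ipfs.io/ipfs/QmSXbxPmcFpHacHMjg5bwgqK7bmARLuWZkNqoVKSNuwzLW/";

// Resolve an asset reference the way a browser does, relative to the page URL.
function resolveAsset(pageUrl: string, assetRef: string): string {
  return new URL(assetRef, pageUrl).href;
}

// Root-relative: escapes the /ipfs/<CID>/ prefix, so the asset 404s.
console.log(resolveAsset(siteRoot, "/static/app.js"));
// Relative: stays inside the site, even from a nested page.
console.log(resolveAsset(siteRoot + "blog/post/", "../../static/app.js"));
```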

Here is my gatsby-config.js with the various plugins I needed to install to get it working.

```javascript
module.exports = {
  siteMetadata: {
    siteUrl: "https://blog.thewalkingcity.eth.link",
    title: "Web3 Blog",
  },
  pathPrefix: '__GATSBY_IPFS_PATH_PREFIX__',
  plugins: [
    `gatsby-transformer-sharp`,
    `gatsby-plugin-sharp`,
    `gatsby-plugin-postcss`,
    "gatsby-plugin-catch-links",
    "gatsby-plugin-react-helmet",
    {
      resolve: "gatsby-transformer-remark",
      options: {
        plugins: [
          `gatsby-remark-images-anywhere`,
          {
            resolve: `gatsby-remark-prismjs`,
            options: {
              classPrefix: "language-",
              inlineCodeMarker: null,
              aliases: {},
              showLineNumbers: false,
              noInlineHighlight: false,
            },
          },
        ],
      },
    },
    {
      resolve: "gatsby-source-filesystem",
      options: {
        name: "images",
        path: "./src/images/",
      },
      __key: "images",
    },
    {
      resolve: "gatsby-source-filesystem",
      options: {
        name: "pages",
        path: "./src/pages/",
      },
      __key: "pages",
    },
    'gatsby-plugin-ipfs',
  ],
};
```

Once my blog was building its public/ directory properly, I could start integrating with web3!

Integrating with web3!

There are many different approaches to getting your blog on IPFS and linked to ENS. I will go through the very manual process and then link to a completely automated process.

Manual Process

- Download, configure and run an IPFS node

- Upload your public directory to your node

- Pin the hash of your blog directory to a remote pinning service (Pinata)

- Configure an ENS subdomain to point at the pinned IPFS hash

1. Configure IPFS

We will start by installing the IPFS command-line client. Go here and follow the install instructions for your platform. Then initialize your IPFS repository:

```shell
$ ipfs init
```

You can view a quick start file by using the cat command:

```shell
$ ipfs cat /ipfs/QmQPeNsJPyVWPFDVHb77w8G42Fvo15z4bG2X8D2GhfbSXc/quick-start
```

We will want to start the daemon before adding files so they can be accessed by peers on the network:

```shell
$ ipfs daemon
```

Once the daemon is started, we can view a list of peers using:

```shell
$ ipfs swarm peers
```

2. Upload blog public directory to IPFS

Now we can finally upload our blog to the IPFS network. Use the following commands to build and upload your Gatsby blog:

```shell
$ yarn build
$ ipfs add -r public/
```

Once your directory has finished uploading, you can access your blog by taking the hash from the last line of the output:

```
added QmSXbxPmcFpHacHMjg5bwgqK7bmARLuWZkNqoVKSNuwzLW public
```

and visiting this link: https://ipfs.io/ipfs/QmSXbxPmcFpHacHMjg5bwgqK7bmARLuWZkNqoVKSNuwzLW

Save that hash for the next step!

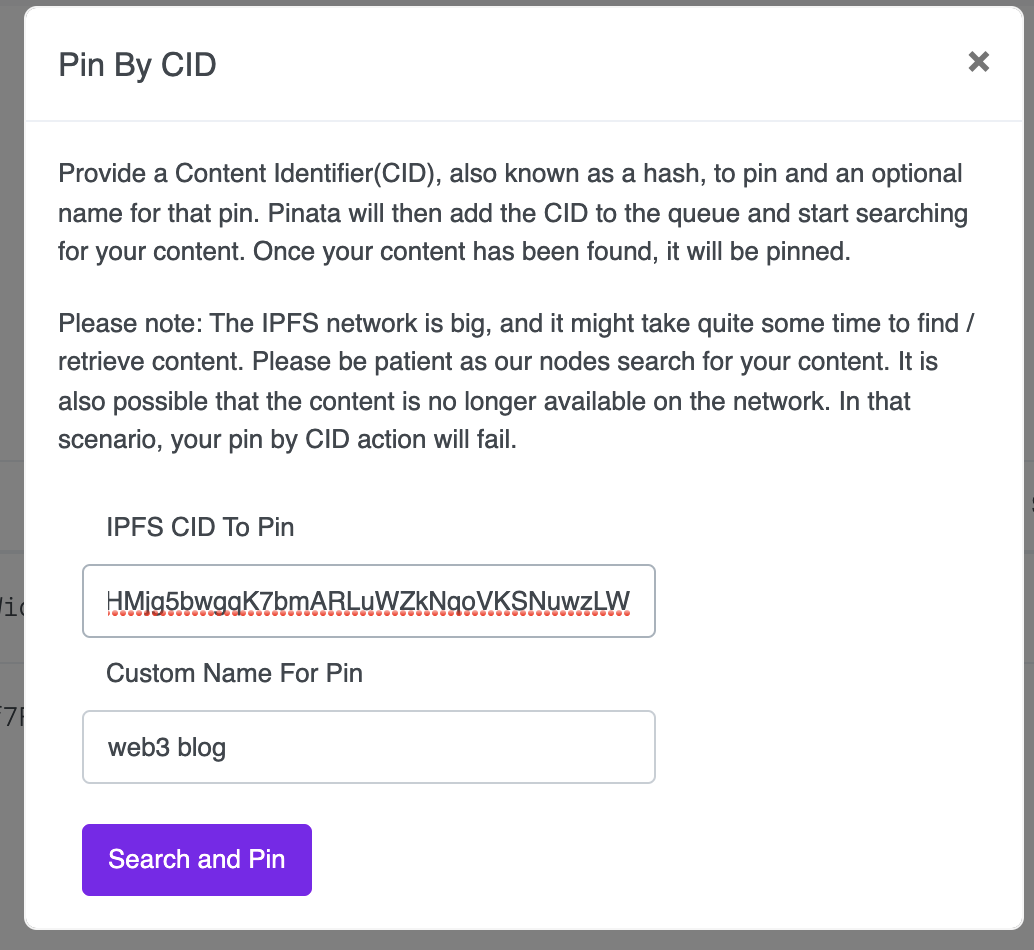

3. Pin CID Hash of your deployed blog

There are a number of services that pin your IPFS files to the network, allowing them to be accessed worldwide (even when your node is turned off). One of the most popular is Pinata.

Sign up for a free account, and once logged in, simply tap the Upload button and select CID. You will be presented with a screen where you can enter the IPFS hash from the previous step!

This will add your CID to the pinning queue and eventually your blog will be pinned.

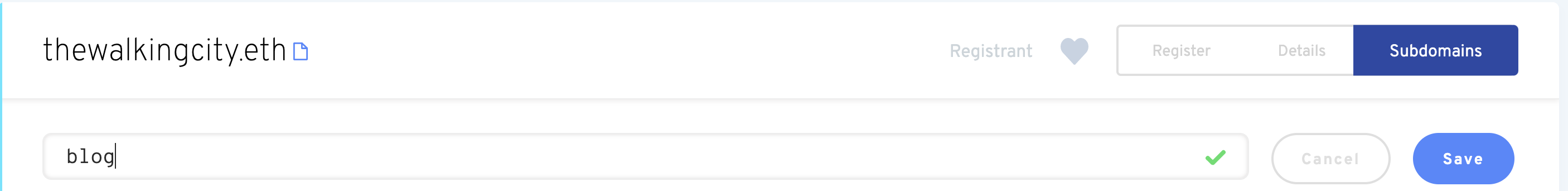

4. Link ENS subdomain to your pinned files

The next step is linking your ENS subdomain to your IPFS pinned directory. I am going to assume you have already signed up for an ENS name. You can follow directions for buying one here.

First go to your ENS name main page and tap on the subdomains tab. Tap the Add subdomain button and enter a subdomain for your blog.

Adding a subdomain is a Smart Contract write interaction so it will cost gas to perform.

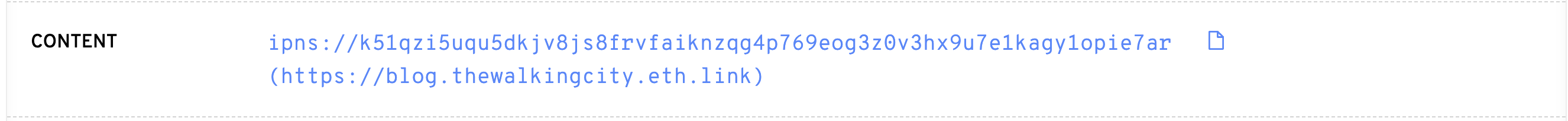

Once you have signed the transaction in your Ethereum wallet, you can configure the subdomain to point to your pinned hash by setting its content record.

This is also a contract interaction and will cost gas.

Once it is set, you will be able to request an SSL certificate for your ENS subdomain.

You are now all set up with IPFS and ENS!

You can share your blog with other people by using the ".link" domain provided by Cloudflare.

Your link will look like this: https://blog.thewalkingcity.eth.link

You can also install browser extensions that will automatically resolve all IPFS and ENS names.

Anytime you update your blog you will need to perform the upload/pin/link process and pay the gas fees involved.

This can be expensive if you use a static site generator and update often, but there are services like Fleek that automate the process and even pay the gas fee for linking your ENS subdomain for free! (This is actually what I use for this blog 🤭)

Here are some guides for getting set up on Fleek:

Fleek also lets you link standard DNS names to your site if you are having issues with .eth.link URLs.